Text of AR Presentation for Dorkbot San Francisco

Anselm Hook

http://github.com/anselm

http://twitter.com/anselm

Quick notes for tonite:

Tomorrow night @sidgabriel is going to do http://www.meetup.com/augmentedreality with the extended hyper-posse. Please join. As well, if you really haven’t had enough then on December 5th is @ARDevCamp at the @hackerdojo as well. If you want to go to @ardevcamp it is free but you MUST register here. If that isn’t enough for y’all then I really can’t help you – we tried really hard to crush your meme.

Also – more personally – if you hate this then you’ll probably also enjoy hating this earlier post on Augmentia and this post on the Aleph No-Op, and if those fail this one might push you over the edge: Bimimetic Signaling.

Here we go….:

Are you going in or are you trying to get out?

Augmented Reality Redux

Who else is playing with AR apps, or wants to? I’m assuming that most people are at least familiar with Augmented Reality.

Recently I started exploring this myself and I wanted to share my experiences so far.

As I’ve been working through this I’ve kind of ended up with a “Collection of Curiosities”. I’ll try to shout them out as I go as well.

My hope is to encourage the rest of you to make AR apps also – and go further than I’ve gone so far.

What is Augmented Reality?

If I had to try to define it – I’d say an Augmented Reality app is an enhanced interaction with everyday life. It takes advantage of super powers like computerized memory and data driven visualization to deliver new information to you just-in-time, ideally connected to and overlaid on top of what we already see, hear and feel.

Beyond this it can ideally watch our behavior and respond – not just be passive.

Observation #1: Of course there’s nothing really new here – our own memories act in a similar way. Because we can all read and decipher signs, symbols, signifiers and gestures, we are already operating at a tremendous advantage. As my friend Dav Yaginuma says “a whole new language of gestural interaction may emerge”. Perhaps we’ll get used to seeing people gesticulating wildly on the streets at thin air – or even avoiding certain gestures ourselves because they will actually make things happen ( as Accelerando hints at in their Smart Air idea ). So what makes it interesting is the degree of change – it is like a phase transition between ice and water or between water and air, as Amber Case puts it.

What are some examples?

1) You could be bored – walking down the street and see that a nearby creek could use some help. So you spend an hour pulling garbage out of it. And somehow understand that that was doing some good.

2) You could be in an emergency situation, outside and maybe it is starting to rain, and maybe you’ve lost track of your friends. An AR view could hint at which way to go without being too obtrusive.

3) You could walk into a bookstore, pick up a book, and have the jacket surrounded by comments from your friends about the book.

Observation #2: The placemarks and imagemarks in our reality are about to undergo that same politicization and ownership that already affects DNS and content. Creative Commons, Electronic Frontier Foundation and other organizations try to protect our social commons. When an image becomes a kind of hyperlink – there’s really a question of what it will resolve to. Will your heads up display of McDonald’s show tasty treats at low prices, or will it show alternative nearby places where you can get a local, organic, healthy meal quickly? Clearly there’s about to be a huge ownership battle for the emerging imageDNS. Paige and I saw this when we built the ImageWiki last year and it must be an issue that people like SnapFish are already seeing.

My own work so far:

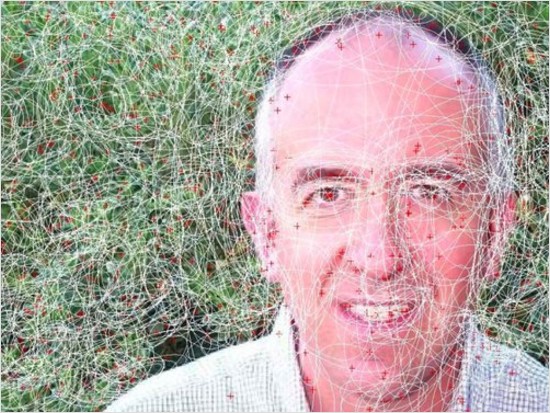

My own app is a work in progress and I’ve posted the source code on the net and you can use it if you want. Here’s what my first pass looked like about a week ago:

First of all I am being motivated by looking for ways to help people see nature more clearly. I’m concerned about our planet and where we’re going with it. Also I’m a 3d video games developer so the whole idea of augmenting my real reality and not just a video game reality seemed very appealing.

My code has two parts: 1) a server side and 2) a client side.

My client side app:

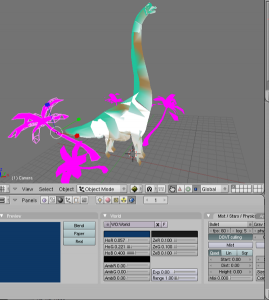

On the client side I let you walk around and hold the phone up and decorate the world with 3d objects.

1) You can walk around and touch the screen and drop an object

2) You can turn around and drop another object

3) If you turn back and look through the lens you can see all the objects you dropped.

4) If you go somewhere else you can see objects other people dropped.

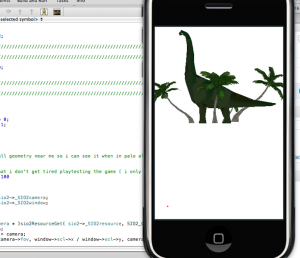

Here is a view of it so far :

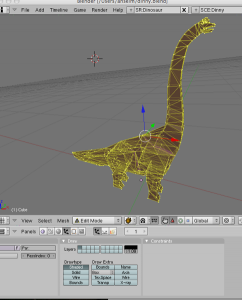

There were a lot of code hassles that I will go into below but basically loading and managing the art assets was the largest part of the work. Here is my dinosaur test model in my warehouse scene:

Dinny from some dude who posted it to Google Sketchup Warehouse

Observation #3: At first I was letting the participants place objects like birds and raccoons. But I ended up switching to gigantic 3d dinosaurs (just like the recently released Junaio app for iPhone). The longitude and latitude precision of the iPhone was so poor that I needed something really big that would really “work” in the shared 3d space. I suggest you design around that limitation for your projects too.

Oddly it was kind of coincidental but it shows the hyper velocity of this space – that something I was noodling was actually launched live by somebody else before I could even finish playing around – hats off to Junaio:

My server side app:

On the server side – I also started to pull in information from public sources to enhance the client view. I used two data sources – Twitter and Waze.

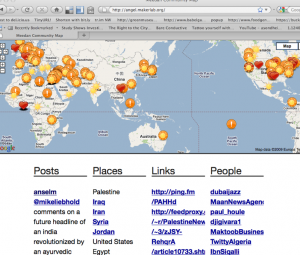

Geolocating some Twitter Data to help with an AR view.

Twitter has a lot of geo-locatable data and I started sucking that in and geo-locating it.

Curious observation #4: I found that I had to filter by a trust network because there is so much spam and noise there. So it shows how this issue of trust is still unsolved in general and how important social filters will be. Spam is annoying enough already – you sure don’t want it in a heads up display. Here’s a tip for AR developers – NEVER SHOW STARBUCKS!
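To make the trust filter concrete, here is a minimal sketch in Python (not the code my server actually runs). The `Post` record, the account names and the sample posts are all made up for illustration; the only idea taken from above is that a post is shown only if its author is in a set of people you trust.

```python
from dataclasses import dataclass


@dataclass
class Post:
    """A geo-located post. This record shape is an assumption for illustration."""
    author: str
    text: str
    lat: float
    lon: float


def filter_by_trust(posts, trusted_authors):
    """Keep only posts whose author is in the trusted set (case-insensitive)."""
    trusted = {name.lower() for name in trusted_authors}
    return [p for p in posts if p.author.lower() in trusted]


# Hypothetical sample data: two trusted friends and one spam account.
posts = [
    Post("anselm", "Creek cleanup at noon", 37.77, -122.42),
    Post("coffee_deals_bot", "50% off lattes!!!", 37.77, -122.41),
    Post("paige", "Dinosaur sighted downtown", 37.78, -122.40),
]
trusted = {"Anselm", "Paige"}
visible = filter_by_trust(posts, trusted)
```

A real trust network would probably be weighted and transitive (friends of friends) rather than a flat set, but even a flat whitelist keeps the obvious spam out of the view.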

Also I started collecting data from Waze (which is an employer of mine). Waze offers a real time crowd sourced car navigation solution for the iPhone, including crowd sourced traffic accident reports for example. They don’t have a formal public API but they do publish anonymized information about where vehicles are. So I am now trying to paint contrails onto the map in real time to show a sense of life. I don’t have a screen shot of that one yet – but here’s the kind of data I want to show:

Waze data

Observation #5: Even twitter started to feel kind of not real time. So what was interesting here was to show literally real time histories and trajectories. It seems like this means animation, and drawing polygons and lines – and not just floating markers. I imagine AR as a true enhancement – not just billboards floating in space. It started to feel more video game like and I feel AR comes more from that heritage than GIS.
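One way to get from position samples to drawable trajectories is sketched below in Python. The sample format `(vehicle_id, timestamp, lat, lon)` is an assumption made for illustration and is not the actual Waze data format; the idea is only to group samples per vehicle, order them by time, and keep the most recent few points as a polyline.

```python
from collections import defaultdict


def build_contrails(samples, max_points=20):
    """Group (vehicle_id, timestamp, lat, lon) samples into per-vehicle polylines.

    Each polyline is ordered oldest to newest and trimmed to the latest
    max_points positions, so the trail fades off at the tail.
    """
    by_vehicle = defaultdict(list)
    for vehicle_id, timestamp, lat, lon in samples:
        by_vehicle[vehicle_id].append((timestamp, lat, lon))

    trails = {}
    for vehicle_id, points in by_vehicle.items():
        points.sort(key=lambda p: p[0])  # order by timestamp
        trails[vehicle_id] = [(lat, lon) for _, lat, lon in points[-max_points:]]
    return trails


# Hypothetical samples arriving out of order from two anonymized vehicles.
samples = [
    ("car-a", 3, 37.7752, -122.4190),
    ("car-b", 1, 37.4419, -122.1430),
    ("car-a", 1, 37.7750, -122.4194),
    ("car-a", 2, 37.7751, -122.4192),
]
trails = build_contrails(samples, max_points=2)
```

Each trail can then be drawn as a line strip in the 3d view instead of a single floating marker.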

Overall Work Impressions

When I was at the Banff Centre earlier this summer a pair of grad students had an AR golf game. The game did not know about the real world and so sometimes you’d have to climb embankments or even fences to get at the golf ball if you hit it into the wrong area. This area is VERY rugged – their game was sometimes VERY hard to play:

Banff Centre

What was surprising to me is the degree of perversity and the kind of psycho-geography feel that the overlay creates – the tension is good and bad – you do things you would never do – the real world is like a kind of parkour experience.

In my project I had some similar issues. I had to modify my code so that I could move the center of the world to wherever I was. If I was working in Palo Alto, and then went to work in SF – all of my objects would still be in Palo Alto – so that was really inconvenient – and I would have to override their locations. I just found that weird.
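A rough sketch of the "movable center of the world" idea, in Python rather than the Objective C I actually used. It assumes a flat-earth (equirectangular) approximation, which is only reasonable over a few kilometers, and the function names and coordinates are made up for illustration.

```python
import math

EARTH_RADIUS_M = 6371000.0  # mean earth radius, good enough for a sketch


def to_local_meters(lat, lon, origin_lat, origin_lon):
    """Convert lat/lon to (east, north) meters relative to a world origin."""
    north = math.radians(lat - origin_lat) * EARTH_RADIUS_M
    east = (math.radians(lon - origin_lon) * EARTH_RADIUS_M
            * math.cos(math.radians(origin_lat)))
    return east, north


def from_local_meters(east, north, origin_lat, origin_lon):
    """Inverse of to_local_meters: (east, north) meters back to lat/lon."""
    lat = origin_lat + math.degrees(north / EARTH_RADIUS_M)
    lon = origin_lon + math.degrees(
        east / (EARTH_RADIUS_M * math.cos(math.radians(origin_lat))))
    return lat, lon


def recenter(lat, lon, old_origin, new_origin):
    """Move an object so it keeps its east/north offset under a new origin."""
    east, north = to_local_meters(lat, lon, *old_origin)
    return from_local_meters(east, north, *new_origin)


# Hypothetical origins: one in Palo Alto, one in San Francisco.
palo_alto = (37.4419, -122.1430)
san_francisco = (37.7749, -122.4194)

# An object dropped about 111 m north of the Palo Alto origin...
dino = (37.4429, -122.1430)
offset_before = to_local_meters(*dino, *palo_alto)

# ...is relocated to sit about 111 m north of the San Francisco origin.
moved = recenter(*dino, palo_alto, san_francisco)
offset_after = to_local_meters(*moved, *san_francisco)
```

The renderer only ever sees the east/north offsets, so overriding the origin is enough to drag a whole test scene along to wherever I happen to be working.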

I also found it weird how even facing a different direction in a room affected how long it took to fix an issue. Sometimes I wouldn’t see something, but it was just because it was behind me and I happened to be sitting a different way.

Curious Observation #6: Making projects about the real world actually changes how you think. Normally if you are sitting at a desk and working, certain kinds of things seem important. But if you are actually standing and interacting and moving through real space, and trying to avoid cars and the like, then the things you think are important are very different. I think it is hard to think about an AR game or project if you are inside. And I think you have to playtest your ideas while actually outside, and try to remember them long enough to actually make a bit of progress, then go back outside and test them.

Implications redux

What’s funny about AR is that almost everybody is doing a piece of it – and now everybody has to talk. There are GIS people who think of AR as their turf. There are video game developers who think of AR as just a variation of a video game. There are environmentalists and privacy people and all kinds of people who are starting to really collide over the topic. All of the ambient and ubicomp people also see it as just a variation of what they’ve already been doing.

It’s also about action. At home in front of a computer you pretty much have most of what you need. In the real world you’re actually hungry, or bored or want to help on something – so it’s bringing that kind of energy – an Actionable Internet.

And spam in AR is really bad. Spam on the web or in email is mildly annoying – spam in AR is completely frustrating.

The crowd-sourcing group mind aspect is pretty interesting as it applies to the real world. It’s going to make it pretty hard to be a police officer – or at least those roles are going to have to change. I imagine that officers might even tell people where they’re placing radar guns so that people can help by being more cautious in those areas.

I also really like the idea of how it is a zero click interface – I think that’s really appealing. I use my phone when I am walking around all the time – but it can be frustrating being distracted. I kind of imagine the perfect AR interface that shows me one view only and only of what I really care about – and I think that’s probably where this is going. It is not like the web where you have many websites that you go to. I think it will be more just one view – and everything in that view ( even though those things come from different companies or people ) will have to interact with each other amicably. I’m really curious how that is going to be accomplished.

Also – I think it’s not just a novelty. As I was working through this I started to see what other people were doing more clearly. And I started to get the strong impression that folks like Apple and Google are actually not just aiming to provide better maps or better data but actually trying to aim at a heads up display where local advertising is blended into the view. I get the sense that there’s a kind of hidden energy here to try and own what we think of as the “view” of our reality – so I expect the hype to actually get even bigger than it is now.

AR involves our actual bodies. In a video game you’re not at risk. Even the closest thing I can imagine – an online dating site – your profile can be anonymous. But if you’re dealing with an AR situation there are real risks; stalking, traps, even just hunger, boredom and exhaustion.

Conclusion of cursory comments

There is something about how it implicates our real bodies. I guess I don’t really know or understand yet but I am finding it fascinating. And I also find it much closer to my deep interests in the world, and in being outside, standing up and interacting with a rich rich information space rather than just a computer machine space. I appreciate computers but I also love the real world and so mixing the two seems like a good idea to me. If we are our world and our world is our skin then in a sense we’re starting to deeply scrawl on our skin. What could possibly go wrong?

I asked Google to find something that would indicate this idea of writing on skin – and this is what Google told me was going to happen. I’m sure it will be alright.

More Technical Code Parts and Progress

The entire work so far took me about a week and a half. This was in my spare time and it was quite a lot of late nights. I had just started to learn the iPhone and I am sure as the weeks progress this work will get much better much faster.

The overall arc was something like this:

0) NO INTERFACE BUILDER. The very first piece of code I wrote was to build iPhone UI elements without using their ridiculous interface builder. IB means that people who are building iPhone apps cannot even cut and paste code to each other but must send videos that indicate how things connect. The entire foundation of shared programming is based on being able to “talk” about work – and having to make or watch a video – at the slow pace of video time – is a real barrier. So for me my first goal was to programmatically exercise control of the iPhone. This took me several days and was before I started on the application proper. Here is one case where I would have largely preferred to use Android.

1) SENSORS. I wrote a bare bones OpenGL app without any artwork and read the sensors and was able to move around in an augmented reality space and drop boxes. This took me a couple of days – largely because I didn’t know Objective C or the iPhone. On the bright side I now know these environments much better than I ever wanted to.

2) ART PIPELINE. First I tried using OpenGL by hand but this became a serious limitation in terms of loading and managing art assets. I tried a variety of engines and after much grief settled on the SIO2 engine because it was open source and the least bad. Once I could actually load up art assets into a world I felt like I had some real progress. This only took me a day but it was frustrating because I am used to tools that are of a much higher caliber coming from the professional games development world.

3) MULTIPLE OBJECT INSTANCING. One would imagine that a core key primary feature of a framework like SIO2 would be to allow multiple instancing of geometry – but it had no such feature – it was surprising. I largely struggled with my own inability to comprehend how they couldn’t see it as a highest priority. Some examples did do this and I cut and pasted code over and removed some of the application specific features. I still remained surprised and that ate up an entire two days to comprehend – I basically was looking for something that wasn’t built in… still surprises me actually.

4) CAMERA POLISH AND IN HUD RADAR. I spent a few hours really polishing the camera physics with an ease in and ease out to the target for smoother movement, and making the camera position and orientation explicit ( rather than implicit in the matrix transform ). This was very important because then I was able to quickly throw up a radar view that gave me a heads up ( within the heads up ) of my broader field of view – and this helped me quickly correct small bugs in terms of where artifacts were being placed and the like.

5) SERVER SIDE. While working in Banff at the Banff Centre briefly this summer I had written a Locative Media Server. It itself was basically a migration of another application I had written – a twitter analytics engine called ‘Angel’. Locative is a stripped down generic engine designed to let you share places – in a fashion similar to bookmarking. I dusted off this code and tidied it up so that I could get a list of features near a location and so that I could post new features to the server. While crude and needing a lot of work – it was good enough to hold persistent state of the world so that the iPhone clients could share this state with each other in a persistent and durable way. Also, it is very satisfying to have a server side interface where I can see all posts, and can manage, edit, delete and otherwise curate the content. And as well, it was an unrelated goal of mine to have a client side for the server – and so I saw this as killing two birds with one stone. Now the currently running server instance is being used as the master instance for this app and it is logging all of the iPhone activity. Once the server was up it became easy to drive the client.

http://locative.makerlab.org

http://angel.makerlab.org

6) IPHONE JSON. Another example of where the iPhone tool-chain sucks is just how many lines of code it takes to talk to a server and parse some JSON. I was astounded. It took me a few hours – mostly because I couldn’t believe how much of a hassle it was and kept looking for simpler solutions. I ended up using TouchJSON which was “good enough”. With this I was finally able to issue a save event from the phone up to the server and then fetch back all the nearby markers. This is a key for a shared Augmented Reality because we want all requests to be mediated by a server first. Every object has to have a unique identifier and all objects have to be shared between all instances – just like a video game. I’ve done this over and over ad nauseam for large commercial video games so I knew exactly what I wanted and once I fought past the annoying language and toolchain issues it pretty much worked first try. It does need improvements but those can come later on – I feel like it is important to exercise the entire flow before polishing.

7) TWITTER. I also fetched geo-located posts from twitter and added them as markers to the world as well. I found that this actually wasn’t that satisfying – I wanted something that showed more of an indication of real motion and time. This was something I had largely already written and enabling it in the pipeline was just a few minutes work.

8) WAZE. With my own server working I could now talk to third party engines and populate the client side. Waze (an employer of mine) graciously provided me with a public API method to access the anonymized list of drivers. Waze in general is a crowd sourced traffic navigation solution that is free for a variety of platforms such as iPhone and Android. I fetched this data and added it to the database and then I was able to deliver that to the client so that I could show contrails of driver behavior. This is still a work in progress but I’m hoping to publish this part as soon as I have permission to do so ( I am not sure I can release their data API URL yet ).

9) FUTURE. Other things I still need to do include a number of touch ups. The heading is not saved so all objects re-appear facing the same way. And I should deal with tilting the camera up and down. And I should let you post a name for your dinosaur via a dialog. Perhaps the biggest remaining thing is to actually SEE through the camera lens and show the real world behind. Also I would like to move towards providing an Augmented Reality OS where I can actually interact with things – not merely look at them.
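The save-then-fetch flow from steps 5 and 6 can be sketched as a tiny in-memory store in Python. This is not the Locative server's code or API: the class, the method names and the record fields are all assumptions for illustration. It shows the two things the flow depends on: every object gets a unique identifier when it is posted, and clients ask for whatever is near a location. The sketch also stores a heading, which is the touch up mentioned under FUTURE.

```python
import math
import uuid


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in meters between two lat/lon points."""
    r = 6371000.0
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dp = p2 - p1
    dl = math.radians(lon2 - lon1)
    a = math.sin(dp / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dl / 2) ** 2
    return 2 * r * math.asin(math.sqrt(a))


class FeatureStore:
    """Hypothetical stand-in for the shared world state held by a server."""

    def __init__(self):
        self._features = {}

    def post(self, kind, lat, lon, heading=0.0):
        """Save a new feature and return its unique identifier."""
        feature_id = str(uuid.uuid4())
        self._features[feature_id] = {
            "id": feature_id, "kind": kind,
            "lat": lat, "lon": lon, "heading": heading,
        }
        return feature_id

    def near(self, lat, lon, radius_m):
        """Return features within radius_m of a point, nearest first."""
        hits = []
        for feature in self._features.values():
            d = haversine_m(lat, lon, feature["lat"], feature["lon"])
            if d <= radius_m:
                hits.append((d, feature))
        hits.sort(key=lambda pair: pair[0])
        return [feature for _, feature in hits]


# Hypothetical usage: one dinosaur in San Francisco, one in Palo Alto.
store = FeatureStore()
sf_id = store.post("dinosaur", 37.7749, -122.4194, heading=90.0)
pa_id = store.post("dinosaur", 37.4419, -122.1430)
nearby = store.near(37.7750, -122.4195, radius_m=500)
```

A client standing in San Francisco only gets the nearby dinosaur back; the Palo Alto one stays on the server until somebody walks over there.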

Code Tool chains

I must say that the tools for developing 3d applications for the iPhone are quite poor by comparison to the kinds of tools I was used to having access to when I was doing commercial video games for Electronic Arts and other companies.

The kinds of issues that I was unimpressed with were

1) The iPhone. XCode is a great programming environment and the fact that it is free, and of such high quality clearly does help the community succeed. There are many iPhone developers because the barriers to entry are so low. But at the same time Objective C itself is a stilted and verbose language and the libraries and tools provided for the iPhone are fairly simple. For people coming from the Javascript or Ruby on Rails world they won’t be that impressed by how many lines of code it takes to do what would be considered a fairly simple operation such as opening an HTTP socket, reading some JSON and decoding it. What is one or two lines of code in Javascript or Ruby is two or three pages of code in Objective C. For example here are some comments from people in the field on this and related topics:

http://kosmaczewski.net/2008/03/26/playing-with-http-libraries/

http://cocoadev.com/forums/comments.php?DiscussionID=259

2) Blender is the status quo for free 3D authoring. It’s actually quite a poor application. Its interface largely consists of memorizing hot-keys and there is a lot of hidden state in sub-dialogs. Perhaps the biggest weakness of Blender is that it doesn’t have an UNDO button – so it makes making mistakes very dangerous. I once spent an hour finally figuring out how to convert an object into a mesh and painting it and I accidentally deleted it and it was completely gone. Even things that claim to be working such as oh, converting an object into a mesh, often as not simply do not and do not provide any feedback as to why. It’s intriguingly difficult to find the name of an object or change it, or to see a list of objects, or import geometry or merge resource files. Features that are claimed such as importing KML seem to fail silently. There are many old and new tutorials that are delightfully conflicting – and often their own Wiki is offline or slow. It really does require going slowly, taking the time to read through it and memorizing the cheat sheets:

http://wiki.blender.org/index.php/Doc:Manual/3D_interaction/Navigating

http://www.cs.auckland.ac.nz/~jli023/opengl/blender3dtutorial.htm

http://download.blender.org/documentation/oldsite/oldsite.blender3d.org/142_Blender%20tutorial%20Append.html

http://download.blender.org/documentation/oldsite/oldsite.blender3d.org/177_Blender%20tutorial%20Game%20Textures.html

http://www.keyxl.com/aaac91e/403/Blender-keyboard-shortcuts.htm

Blender came out of researching how to get objects into the OpenGL on the iPhone – at the end of the day it was the only choice pretty much.

http://www.blumtnwerx.com/blog/2009/03/blender-to-pod-for-oolong/

http://iphonedevelopment.blogspot.com/2009/07/improved-blender-export.html

http://www.everita.com/lightwave-collada-and-opengles-on-the-iphone

3) SIO2. I first rolled my own OpenGL framework but managing the scene and geometry loading was too much hassle so I switched to an open source framework. SIO2 provides many tutorials and examples and at least it seems to compile, build and run and is reasonably fast. But it also has several limitations. The online documentation is infuriatingly empty – while they’re proud of having documentation for all classes and methods the documentation doesn’t actually say what things DO – it just describes the name of the function and nothing else. And many of the tutorials conflate several concepts together such as multiple instancing AND physics so that one is unsure of orthogonal independent features. Also the broken English throughout creates a significant learning curve. It is free but it needs a support community to come around it and to improve not the core engine but the support materials. SIO2 works closely with Blender and has a custom exporter – some of the tutorials on youtube show how to use it ( the text documentation magically assumes these kinds of things however ). Although I hate watching video tutorials it is a pre-requisite to actually learning to work with the SIO2 pipeline. Overall now after a few days of bashing my head against it I can finally use it with reasonable confidence.

http://sio2interactive.com/SIO2_iPhone_3D_Game_Engine_Technology.html

http://sio2interactive.wikidot.com/sio2-tutorials-explained-resource-loading

4) Google Sketchup appears to be the best place to find models. I couldn’t actually even get Google Sketchup to install and run on my new MacBook Pro so I ended up using a friend’s computer to dig through the Google Sketchup Model Warehouse and import a model and then convert it to 3DS. I ended up having to use Google Sketchup PRO because I couldn’t find any other way to get models from KMZ into 3DS or some other format supported by Blender. The Blender Collada importer fails silently and the Blender KML importer fails silently. The only path was via 3DS.

http://sketchup.google.com/3dwarehouse/details?mid=f95321b35c2817bdaef005b7f8d10dde&prevstart=12

http://www.katsbits.com/htm/tutorials/sketchup_converting_import_kmz_blender.htm

5) Camera. One of my big goals which I have not hit yet is to see through the camera and show the real world. On the iPhone this is undocumented – but I do have some links which I will be researching next:

http://hkserv.ugent.be/boudewijn/blogs/?p=198

http://www.developerzone.com/links/search.html?query=domain%3Amorethantechnical.com

http://code.google.com/p/morethantechnical/

http://mobile-augmented-reality.blogspot.com/2009/11/iphone-camera-access-module-from.html

Technical Conclusions

My overall technical conclusion is that Android is probably going to be easier to develop applications for than the iPhone. I haven’t done any work there yet but the grass seems very green over there. The language choice of Java seems like it would be a more pleasing, simple and straightforward grammar, and the access to lower level device data such as the raw camera frames seems like it would also help. Also since there are fewer restrictions in the Android online stores it seems easier to get an app out the door. As well it feels like there is more competition at the device level and that devices with better features are going to emerge more rapidly for the Android platform than for the iPhone. I think if I had tried to do this for the Android platform first that it would have been doable in 2 to 3 days instead of the 7 or 8 days that I have ended up putting into it so far.

My overall general conclusion is that AR is going to be even more hyped than we see now but that it will be hindered by slow movement in hardware for at least another few years.

I do think that we’re likely to see real changes in how we interact with the world. I think today the best video that shows where I feel it is going is this one ( that isn’t even aimed at this community per se ) :

AICP Southwest Sponsor Reel by Corgan Media Lab from Corgan Media Lab on Vimeo.